As most of us know, or at least have heard, user expectations for applications are at an all-time high. For mobile and web applications, they border on astronomical. Yet many companies are still failing to achieve the quality that users expect.

Often, consumer application developers are caught in a “perform or die” scenario and are forced to place greater emphasis on quality, at least initially. However, developers of enterprise or in-house applications frequently have a “built-in” customer base and may be less likely to give quality its due.

This is unfortunate, because poor application quality—as defined by problems such as inadequate functionality and lack of intuitiveness—will reduce workplace productivity and user acceptance, even though personnel are technically “using” the application.

In late 2015, Forrester released the results of a study on mobile applications that surveyed 1,000 consumers, enterprise application initiative leaders, and application professionals and delved into the criteria for a great app. Its findings align closely with my own opinion and the corporate position here at Orasi, so I will use its statistics throughout this discussion.

What Makes an App Great?

Forrester identified four key findings in its report:

- A great app not only works flawlessly; it also provides immediate and relevant mobile moments.

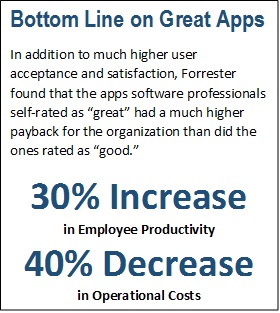

- Great apps increase revenue, reduce cost, and engage customers exponentially more than good apps.

- Firms must invest to create great apps.

- Firms must measure the things that will lead them to create great apps.

Although “providing immediate and relevant mobile moments” is mobile specific (and beyond the scope of this discussion), I submit that every other finding rings true for all applications, whether mobile, web, or enterprise. However, the fourth finding, which is the only one that deals with process, merely hints at what firms must do to achieve application greatness.

Measuring the things that are important is absolutely critical, but firms must also use that information effectively by incorporating it into a meaningful quality system that cannot be disrupted by corporate priorities, from demand for faster release cycles to budgetary restrictions.

Measurement is only of value when its information is productively used to increase quality—to improve a product in ways that are verifiable from the user’s perspective.

New Models Raise Old Problems

One of the great ironies in the pursuit of quality is that new approaches, from agile to continuous delivery and DevOps, can become part of the problem. Organizations recognize that users want better quality, sooner, so they adopt agile methods to accelerate release cycles. They understand that deferring testing to the end of a release is a broken approach, so they move toward a continuous delivery model.

However, unless the organization completes fundamental shifts that must accompany any end-to-end quality initiative, the effort is likely to be doomed. These shifts include:

- Realigning corporate priorities to make quality an imperative, with a functional oversight (measurement, evaluation, and adjustment) process.

- Refining the application development lifecycle to monitor, measure, and address not only development and testing (e.g., defect) data but also data collected through production-side user and application monitoring.

- Embracing the reality that speed without quality is a recipe for disaster.

These changes take time and effort, and it’s often easier to decide, “let’s change the development and testing model first, then work out the leftover quality kinks later.” This is putting the proverbial cart before the horse, because moving to a different delivery model will not automatically alleviate dysfunction. In fact, it will often make it worse.

Still, companies that are already engaged in or have adopted agile or continuous approaches definitely do not need to revert to waterfall or V-model in order to improve quality. They can integrate quality initiatives over time, provided they have a well-developed plan for achieving this goal and can designate quality-focused team members (or hire consultants) to concentrate on that effort. I worked with a large financial services organization that implemented continuous integration, continuous delivery, and continuous deployment very successfully—largely because the entire effort was led by the quality division.

Performance Really Is King

Another issue in achieving quality and application greatness, especially for mobile applications, is performance. A brilliantly designed application is worthless if it doesn’t perform, yet I frequently see organizations allot minimal time and effort for performance engineering or even performance testing.

Furthermore, users are becoming more cognizant of, and focused on, performance-related application functions, especially for mobile apps. In the Forrester study mentioned earlier, the top five user criteria for a great app were:

- Does not crash/freeze/display an error (55%)

- Does not suck down my battery or take up a lot of memory (50%)

- Saves me time (45%)

- Sets my privacy settings to my preferences (42%)

- Gives quick access to features I use most (39%)

Most of these relate, at least in part, to performance. Users are becoming savvy enough to check their battery usage monitors and see that a particular app is draining battery life. They are cognizant of privacy settings and want applications to adapt to them automatically.

A decade ago, most mobile device users didn’t even know what privacy settings were, and they had no idea what caused their battery to drain other than usage. Today, they will reject applications where performance quality is lacking, and statistics show they will share that dissatisfaction with others. Application developers must be cognizant of this sophistication when they consider app performance.

The End Game

The two issues I’ve covered today are illustrative of the impediments that can stand in the way of application quality, but they are not the only ones. Organizations that want to attain the pinnacle of greatness for their applications must be ever vigilant regarding both problems and opportunities.

If they haven’t done so already, they must establish quality teams (and preferably divisions) whose role goes well beyond reading defect reports and determining whether or not testing is catching enough of them. These team members must become engaged with quality at every level—from user stories through production monitoring and back.

As food for thought and future discussion, the following are a few quality-focused questions to ask yourself and your team. If the answer is no, dig deeper.

- Is system uptime in production meeting goals, and are those goals high enough?

- Are you failing earlier rather than later?

- Are you incorporating sufficient testing activities (with sound testing practices)?

- Are you receiving production-side feedback, and is that information meaningfully incorporated into user stories? Do code updates reflect that input?

- Are you taking advantage of automation where you can?

- Are you and your team learning from mistakes of the past?
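
To make the first question concrete, here is a minimal sketch of how a team might compare measured uptime against a goal. The function name `uptime_report`, its parameters, and the 99.9% goal in the usage example are my own illustrative assumptions, not figures from the Forrester study or from any particular monitoring tool.

```python
from dataclasses import dataclass


@dataclass
class UptimeReport:
    availability_pct: float      # measured availability for the period
    allowed_downtime_min: float  # downtime budget implied by the goal
    meets_goal: bool             # True if actual downtime fits the budget


def uptime_report(period_minutes: float,
                  downtime_minutes: float,
                  goal_pct: float) -> UptimeReport:
    """Compare measured availability for a period against a goal percentage.

    All names and inputs here are hypothetical; real numbers would come
    from whatever production monitoring the team already has in place.
    """
    if period_minutes <= 0:
        raise ValueError("period_minutes must be positive")
    if not 0 <= downtime_minutes <= period_minutes:
        raise ValueError("downtime_minutes must be between 0 and period_minutes")
    if not 0 <= goal_pct <= 100:
        raise ValueError("goal_pct must be between 0 and 100")

    availability = 100.0 * (period_minutes - downtime_minutes) / period_minutes
    allowed = period_minutes * (1 - goal_pct / 100.0)
    return UptimeReport(availability, allowed, downtime_minutes <= allowed)


if __name__ == "__main__":
    # Assumed example: a 30-day month with 50 minutes of downtime and a 99.9% goal.
    report = uptime_report(period_minutes=30 * 24 * 60,
                           downtime_minutes=50,
                           goal_pct=99.9)
    print(f"availability: {report.availability_pct:.3f}%")
    print(f"downtime budget: {report.allowed_downtime_min:.1f} min")
    print(f"meets goal: {report.meets_goal}")
```

Under these assumed inputs, a 99.9% goal leaves a budget of roughly 43 minutes of downtime in a 30-day month, so 50 minutes misses it. Whether the goal itself is high enough is the second half of the question, and no calculation can answer that for you.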

When true end-to-end quality is built into the software development lifecycle, processes flow more smoothly, problems are less prevalent, and both team members and users are more satisfied. Once quality initiatives succeed in propelling applications to the level of greatness, the results are even more amazing.