How can this be? Testing without requirements, the cornerstone of quality? Conducting functional testing against documented requirements is commonplace and expected. Software testers need pass/fail criteria when grappling with features and functionality. However, evaluating system and application performance is more subjective, and the criteria used to judge whether a system under test performs well or badly can be slippery and elusive.

I have been involved in numerous projects without the benefit of non-functional requirements. I suspect this happens mostly because the project sponsors just don’t know what to provide in this area. Sometimes system/application performance requirements lie submerged because the project stakeholders, working under duress, are so focused on deadlines and on stuffing features and bug fixes into the application that they haven’t taken the time to think about performance requirements.

Fight, Flight, or Talk It Out

Test plans beg for requirements, and so should agile sprints when performance testing is in scope. The plans/sprints should cite pass/fail criteria based upon sound requirements crafted by group consensus. Before you start testing, it’s better to have 1) goals (these can be subjective), 2) expectations (also subjective), and 3) requirements (these are more objective and metrics-based). But sometimes you’ve got to reach for them.

You’ve heard of the fight-or-flight response? We testers are intelligent beings, and we have another option: negotiate. If you have been assigned to a performance testing project bereft of requirements, you could throw up your hands and retreat. If you do, just be sure to accept the consequences of giving up.

On the other hand, if you have the moxie and feel confident in your political clout, then fight back and insist that the project sponsors enlighten your efforts with something resembling success criteria for your testing.

In the absence of feedback and constructive input from those charged with this responsibility, you have work to do. The first challenge is to identify those responsible and elicit their system/application performance goals, expectations, and non-functional requirements.

If requirements are still not forthcoming and you elect to stay the course, state your predicament in your test plan or formal communications in order to protect yourself. Concede that you are in exploratory mode and, for now, have agreed to proceed without formal requirements. You’ll have to create makeshift requirements and goals. (I’ve got some examples later in this blog as a start.)

At a minimum, document what you are going to do during your performance testing effort. After test execution, report your findings objectively. Avoid playing judge, jury, and executioner, because too much editorializing might come back to bite you later, courtesy of someone whose job security is as shaky as the application being tested. Your task should be to determine the performance-related status of the system under test within the constraints of the tools and resources given to you, including the test environment and the state of the code at the time of testing.

If you’re not getting good feedback, but you suspect the reason is ignorance on the part of your project sponsors and not stonewalling, then extend the olive branch and strive to work with those who are willing. Use your experience and knowledge to forge a set of requirements that, hopefully, are acceptable to the sponsors.

If you propose a set of “straw-man” requirements and find one or more of the project sponsors challenging your suppositions, then you’ve set the ball in motion. And if the project sponsors begin to argue among themselves as to what constitutes acceptable pass/fail criteria, then take a moment to gloat. You’ve earned the role of requirements facilitator.

The last choice is, unfortunately, to walk away, but be sure to alert your manager to your dilemma.

Test Reporting Basics – Size, Speed, and Rate of Change

If you are forced to construct your own performance testing requirements, then strive to provide basic information following the conclusion of your performance tests. Your testing goals should produce, at a minimum, the following results for the system under test:

- The ability of the system under test to support X number of concurrent users/connections

- At what speed was the work done? What were the response times?

- What was the work throughput given the variable test conditions (connections, transaction loads, test duration, etc.)?
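If your harness can export one record per transaction, a few lines of Python can roll those three results into one summary per test run. This is a minimal sketch, assuming a hypothetical export that gives a response time and a success flag for each transaction; the `Sample` and `summarize` names are illustrative, not part of any particular tool.

```python
from dataclasses import dataclass
from statistics import mean, quantiles


@dataclass
class Sample:
    """One transaction as exported by a (hypothetical) test harness."""
    response_s: float  # response time in seconds
    ok: bool           # True if the transaction succeeded


def summarize(samples: list[Sample], concurrent_users: int, duration_s: float) -> dict:
    """Roll raw samples into size (users), speed (response times), and throughput."""
    succeeded = [s for s in samples if s.ok]
    times = sorted(s.response_s for s in succeeded)
    # quantiles(n=100) returns the 99 cut points p1..p99, so p90 is index 89.
    # It needs at least two successful samples to work with.
    pct = quantiles(times, n=100, method="inclusive")
    return {
        "concurrent_users": concurrent_users,
        "transactions_ok": len(succeeded),
        "transactions_failed": len(samples) - len(succeeded),
        "mean_response_s": round(mean(times), 3),
        "p90_response_s": round(pct[89], 3),
        "p95_response_s": round(pct[94], 3),
        "throughput_per_hour": round(len(succeeded) / duration_s * 3600, 1),
    }
```

Reporting a percentile alongside the mean keeps a few slow outliers from hiding behind an average.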

Your makeshift testing goals should address the major topics of size, speed, and rate of change. Your test plan should pledge to provide the following:

- How many system resources were consumed during the test compared to a quiet, steady state in your test environment? What was the size of your loads (# of users/connections)?

- How fast were the units of work/transactions processed?

- How quickly did things change during testing that could be attributable to varying the load applied during testing? What was the slope (rate of change) for system consumption and response times in the graphs produced during your testing? The slope or rate of change uncovered during testing may be a useful metric in the creation of a performance requirement.

Do the Math

Is the volume of work units known? For example, 100 insurance policies created per hour? Can the number of users using the system at peak time be determined? For example, 500 users hitting the application on Friday at 4:30 p.m. PST?

Here’s a breakdown example: fourteen hours of prime-time application usage per day, 1,000 total users estimated to be eligible to use the application, and 5,000 records estimated to be produced per day on average. Multiply the average hourly throughput by an arbitrary coefficient (let’s say 3) to get a “peak” or target load. Using my hypothetical numbers:

5,000 records ÷ 14 hours of daily usage ≈ 357.1 records produced per hour on average

Formulate a peak load by multiplying the average (about 357.1) by 3 to give about 1,071.4 records in one peak hour. Next, try to determine how long it would take an average user of the application to create one record, say 15 minutes. That works out to 4 records per hour per person. Dividing 1,071.4 records per peak hour by 4 records per hour per person yields 267.9, or roughly 268 users needed to produce the desired workload during a peak hour. Assuming your performance test harness has the necessary wherewithal, such as software user licenses and the hardware to support the load, you’ve got yourself a serviceable load testing scenario.
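The same arithmetic fits in a small Python function, so you can rerun it whenever the estimates change. The function name and parameters are hypothetical; the function rounds the user count up, since you can’t script a fraction of a virtual user.

```python
import math


def peak_hour_users(records_per_day: float,
                    usage_hours_per_day: float,
                    peak_factor: float,
                    minutes_per_record: float) -> tuple[float, float, int]:
    """Turn daily volume estimates into a peak-hour workload and a virtual-user count."""
    avg_per_hour = records_per_day / usage_hours_per_day  # average hourly throughput
    peak_per_hour = avg_per_hour * peak_factor            # arbitrary "peak" coefficient
    records_per_user_hour = 60 / minutes_per_record       # one user's hourly output
    users = peak_per_hour / records_per_user_hour
    # Round up: a fractional virtual user can't be scripted.
    return avg_per_hour, peak_per_hour, math.ceil(users)


avg, peak, users = peak_hour_users(5000, 14, 3, 15)
print(f"{avg:.1f} records/hour average, {peak:.1f} at peak, {users} virtual users")
# 357.1 records/hour average, 1071.4 at peak, 268 virtual users
```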

Comparables

Consider this real estate metaphor. If the value of your home can be determined by studying comparable homes (comps) within your neighborhood, then perhaps expectations for response time and system resource consumption could be derived by researching how other applications are performing. You might be inclined to ignore anecdotal evidence to build your set of requirements, but when your project sponsors shirk their duties and you need benchmarks for justification, try looking afield for your performance testing “comps.”

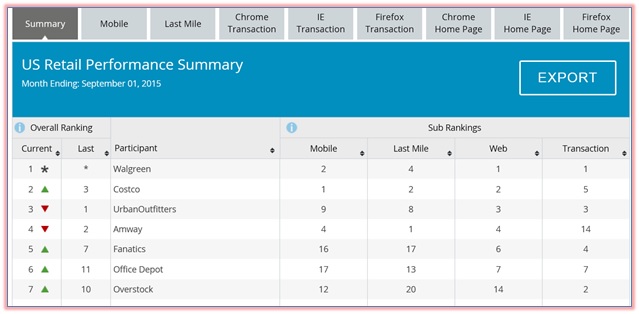

Here is a snapshot from Dynatrace’s mobile and website performance benchmarks web site that can give you some industry “comps.”

Unfortunately, when it comes to assessing system resource consumption (CPU, memory, etc.), there are no industry standards that I know of. You’re going to have to work with the system engineers and architects to make the judgement call as to whether the resource consumption observed during testing passes muster. I would compare the results of 1) a test using one unit of work, 2) a medium-sized test using more units of work, and 3) a larger test producing even more units of work. From this set of results, determine the delta (rate of change) for each metric in question to see whether the differences are linear or approach the proverbial vertical “hockey stick” effect.
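One way to put a number on “linear or hockey stick” is to compute the change in a metric per unit of added work between consecutive test sizes and flag a sharp jump. This is a sketch under stated assumptions: the 2x tolerance is an arbitrary, hypothetical threshold to agree on with your engineers and architects, and the readings are made up.

```python
def marginal_rates(loads: list[float], metric: list[float]) -> list[float]:
    """Change in a metric per unit of added load between consecutive test sizes."""
    return [
        (metric[i] - metric[i - 1]) / (loads[i] - loads[i - 1])
        for i in range(1, len(loads))
    ]


def looks_like_hockey_stick(loads: list[float], metric: list[float],
                            tolerance: float = 2.0) -> bool:
    """True if a later marginal rate exceeds the first one by more than `tolerance` times.

    The 2x default is a hypothetical threshold, not an industry standard.
    """
    rates = marginal_rates(loads, metric)
    return any(rate > tolerance * rates[0] for rate in rates[1:])


# Made-up results for 1, 10, and 50 units of work (small, medium, large tests).
units = [1, 10, 50]
cpu_pct = [2.0, 11.0, 51.0]   # grows by 1 point per unit: linear
response_s = [0.5, 0.6, 4.6]  # nearly flat, then climbs sharply
print(looks_like_hockey_stick(units, cpu_pct))     # False
print(looks_like_hockey_stick(units, response_s))  # True
```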

Response-Time Limits

Using industry-standard response-time limits might prove helpful in establishing your performance requirements. Human factors studies done years ago [Miller 1968; Card et al. 1991] can still provide a measuring stick for human-computer interaction. The following three response-time limits, presented by the Nielsen Norman Group, still hold true and can help you construct expectations for your project’s application. Of course, surveying the end-user community would supersede this method. But again, we are playing with a short deck here, with no a priori requirements.

- 0.1 second gives the feeling of instantaneous response. That is, the outcome feels like it was caused by the user, not the computer. This level of responsiveness is essential to support the feeling of direct manipulation (one of the key GUI techniques to increase user engagement and control).

- 1 second keeps the user’s flow of thought seamless. Users can sense a delay, and thus, know the computer is generating the outcome. But, they still feel in control of the overall experience and that they’re moving freely rather than waiting on the computer. This degree of responsiveness is needed for good navigation.

- 10 seconds keeps the user’s attention. From 1–10 seconds, users definitely feel at the mercy of the computer and wish it were faster, but they can handle it. After 10 seconds, they start thinking about other things, making it harder to get their brains back on track once the computer finally does respond.
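Once you adopt the Nielsen Norman Group’s three limits (0.1 second, 1 second, 10 seconds) as straw-man requirements, you can bucket measured response times against them. A minimal sketch; the band labels are my own shorthand, not official terminology.

```python
from collections import Counter


def nielsen_band(response_s: float) -> str:
    """Place one response time against the 0.1 s, 1 s, and 10 s limits."""
    if response_s <= 0.1:
        return "feels instantaneous"
    if response_s <= 1.0:
        return "flow of thought intact"
    if response_s <= 10.0:
        return "attention kept"
    return "attention lost"


def band_report(response_times_s: list[float]) -> Counter:
    """Count how many measured responses fall into each band."""
    return Counter(nielsen_band(t) for t in response_times_s)


print(band_report([0.05, 0.4, 0.8, 2.5, 12.0]))
```

A straw-man pass/fail rule might then read “no responses in the attention-lost band at peak load,” subject to your sponsors’ agreement.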

What, No Test Cases Either?

What if you have no test cases provided by your project sponsors? Then, ouch! You really have to wing it. Here are two quick-and-dirty performance scenarios I would construct under such circumstances:

- Simply provide a slow, incremental ramp up of connections established to the system under test, and stick your thermometers into the servers to measure 1) system resource consumption (CPU, memory, disk I/O, and network utilization), 2) the speed at which the connections are established, and 3) a count of connections that fail or incur timeouts compared to the total attempts at connecting. As an example, consider a test case where the application user simply logs in, pauses, logs out, and repeats the process. I know from experience that establishing multiple authentications within an application can put the system under test through some strain.

- Another quick-and-dirty scenario to use in lieu of genuine test cases is what I call “touch all services.” If you are working with an application that has a tiered menu structure or offers a palette of services accessible via your performance testing harness, then create a test case that simply invokes a wide range of the components or modules contained within your system under test. Case in point: imagine signing into an application and canvassing the accessible menu options, many of which will invoke separate code groups. Is this an in-depth load testing scenario that replicates real users in a real sense? Certainly not. But this scenario can serve as an alternative when “real” test cases aren’t provided by those with more subject matter expertise.
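Both scenarios can be sketched in a few lines of Python. This is a minimal sketch under loud assumptions: `login_cycle` and the entries in `services` are hypothetical callables you would write against your own system (for example, calls that log in, pause, and log out); threads stand in for virtual users; and the server-side thermometers (CPU, memory, disk I/O, network) still come from your own monitoring tools.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def timed_call(action: Callable[[], object]) -> tuple[str, float]:
    """Run one action and classify it as ok, timeout, or failed, with elapsed seconds."""
    t0 = time.perf_counter()
    try:
        action()
    except TimeoutError:
        return "timeout", time.perf_counter() - t0
    except Exception:
        return "failed", time.perf_counter() - t0
    return "ok", time.perf_counter() - t0


def ramp_up(login_cycle: Callable[[], object], start: int, step: int,
            max_users: int, cycles: int = 1) -> dict[int, dict]:
    """Scenario 1: slow, incremental ramp of concurrent login/pause/logout cycles.

    Each step runs `users` threads (start must be >= 1), and each thread repeats
    the hypothetical login_cycle `cycles` times. Only the client-side view is tallied.
    """
    results = {}
    for users in range(start, max_users + 1, step):
        with ThreadPoolExecutor(max_workers=users) as pool:
            per_user = pool.map(
                lambda _: [timed_call(login_cycle) for _ in range(cycles)], range(users))
            outcomes = [o for user_outcomes in per_user for o in user_outcomes]
        ok_times = [t for status, t in outcomes if status == "ok"]
        results[users] = {
            "attempts": len(outcomes),
            "timeouts": sum(status == "timeout" for status, _ in outcomes),
            "failed": sum(status == "failed" for status, _ in outcomes),
            "mean_ok_s": sum(ok_times) / len(ok_times) if ok_times else None,
        }
    return results


def touch_all_services(services: dict[str, Callable[[], object]]) -> dict[str, tuple[str, float]]:
    """Scenario 2: invoke every reachable menu option/service once, recording each outcome."""
    return {name: timed_call(invoke) for name, invoke in services.items()}


# Stand-ins for real harness calls (hypothetical service names).
services = {"search_policies": lambda: None, "print_report": lambda: 1 / 0}
print(touch_all_services(services))
```

Comparing `timeouts + failed` against `attempts` at each step gives the failure count the first scenario asks for.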

The Take Away

When you step up and carve out performance testing requirements where none existed, you’ll show your mettle as someone who provides value as a creator and developer of technical assets. So, don’t despair if you find yourself having to do this. Make yourself shine!