We were working on a project that required us to bring everything under version control to facilitate continuous delivery. Our team came up with a solution for the performance testing elements. The key was to include more than just the scripts. We needed a design that captured everything required to reproduce the test runs and to store results for baselining, troubleshooting, and analysis.

We included a Z_ReadMe.Txt (or .doc, .rtf) at each directory level as a reference that explains what each folder should contain.

After setting up this structure and importing it into Subversion, we can both track incremental changes and issue a major version to correspond with a release. This way, the data, the scripts used, the scenarios created, the results, and all other performance activities can be preserved and recovered. In our next step we will set up a trigger from the automated build process to retrieve our performance scripts and kick off a smoke test. We will do this using HP LoadRunner via command line options. The results will then be automatically parsed into a dashboard such as SonarQube or Integrity.
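As a rough sketch of what that build trigger might assemble, the Python below only builds the two command lines and does not run them. Everything specific here is an assumption for illustration: the function name, the repository URL, the working directory, and the scenario file name are hypothetical, and the `Wlrun.exe` arguments (`-Run`, `-TestPath`, `-ResultName`) reflect our understanding of the LoadRunner Controller command line and should be checked against the documentation for your version.

```python
from pathlib import PureWindowsPath


def build_smoke_test_commands(repo_url, checkout_dir, scenario_file, result_name):
    """Return (checkout_cmd, run_cmd) as argument lists; nothing is executed.

    All names and paths are illustrative assumptions, not a fixed convention.
    """
    # Pull a clean copy of the performance artifacts from Subversion.
    checkout_cmd = ["svn", "export", "--force", repo_url, checkout_dir]

    # The scenario is assumed to live in the Scenarios folder of the structure.
    scenario_path = PureWindowsPath(checkout_dir) / "Scenarios" / scenario_file

    # Assumed LoadRunner Controller arguments; verify for your version.
    run_cmd = [
        "Wlrun.exe",
        "-Run",
        "-TestPath", str(scenario_path),
        "-ResultName", result_name,
    ]
    return checkout_cmd, run_cmd


if __name__ == "__main__":
    checkout, run = build_smoke_test_commands(
        "https://svn.example.invalid/perf/trunk",  # hypothetical URL
        r"C:\perf",
        "smoke.lrs",
        "smoke_001",
    )
    print(" ".join(checkout))
    print(" ".join(run))
```

A build server could hand each list to its own process runner. Keeping command construction separate from execution makes the trigger easy to review before it is wired into the build.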

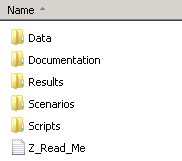

Here is our current design. I’m curious about any questions or suggestions.

2. Data folder

All data-related artifacts are stored in this folder. They include:

a. Input Data Files

b. LoadRunner Data Scripts

c. SOAP UI Data Creation Projects

d. A ReadMe file detailing creation and what scripts the data was used in

3. Documentation folder

This is where any supporting documentation is stored. This could include:

a. Test Plan

b. Test Strategy

c. Infrastructure documents

d. Performance Requirements documents

e. etc.

4. Results folder

This is where all of the LoadRunner results artifacts are stored for this project. They include:

a. LoadRunner Raw Results

b. LoadRunner Analysis Results

c. LoadRunner HTML/Word/PDF/etc. Results

d. Any other miscellaneous results files such as Excel documents, networking team generated results, infrastructure team generated results, etc.

e. A ReadMe file detailing the results files and folders

f. Run log

Note: It is not necessary to archive all results. Only the final results are required.

5. Scenarios folder

This is where all of the LoadRunner scenarios are stored for this project. It is not necessary to archive all scenarios. Only the scenarios to support the final test results are required.

6. Scripts folder

This is where all of the LoadRunner scripts are stored for this project. It is not necessary to archive all scripts. Only the scripts to support the final test scenarios and results are required.
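For anyone who wants to try the layout before importing it into version control, here is a minimal Python sketch that creates the five folders described above and drops a Z_ReadMe.txt into each one. The function name and the readme wording are our own illustration, not a required convention, and the sketch covers only the folders listed here.

```python
from pathlib import Path

# Folder names come from the design above; the descriptions are paraphrased.
FOLDERS = {
    "Data": "Input data files, LoadRunner data scripts, SOAP UI data creation projects.",
    "Documentation": "Test plan, test strategy, infrastructure and performance requirements documents.",
    "Results": "Final LoadRunner raw, analysis, and report results, other teams' results, and the run log.",
    "Scenarios": "LoadRunner scenarios that support the final test results.",
    "Scripts": "LoadRunner scripts that support the final scenarios and results.",
}
README_NAME = "Z_ReadMe.txt"


def scaffold(root):
    """Create each folder under root with a readme; return the readme paths."""
    root = Path(root)
    readmes = []
    for name, description in FOLDERS.items():
        folder = root / name
        folder.mkdir(parents=True, exist_ok=True)
        readme = folder / README_NAME
        # Never overwrite a readme that someone has already edited.
        if not readme.exists():
            readme.write_text(f"{name} folder\n\n{description}\n", encoding="utf-8")
        readmes.append(readme)
    return readmes


if __name__ == "__main__":
    for path in scaffold("PerformanceTesting"):  # hypothetical root folder name
        print(path)
```

Running it a second time is harmless: existing folders are reused and existing readme files are left untouched.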

Charles Boettger, Consultant of Managed Performance Testing at Orasi Software