The Bell X-1 is the historic US plane credited with breaking the sound barrier. The “sound barrier” is a term coined to represent the belief that man and aircraft could not travel faster than the speed of sound. That belief stemmed from the perception that drag (air resistance and friction), which increases dramatically as an aircraft approaches the speed of sound, would prevent the plane from achieving supersonic speeds and ultimately end in the catastrophic destruction of pilot and plane. Through applied engineering, the US deepened its understanding of how to counteract the effects of drag in aeronautics. Air travel has since advanced to the point that Mach 6 (six times the speed of sound) is a reality for manned aircraft, shattering the so-called “sound barrier”.

For many IT shops, the same can be said about application delivery velocity and the “(speed of) agile barrier”. This barrier keeps software shops from fully leveraging and benefiting from Agile, holding them back from producing at the speed of agile. Most shops have good reason to believe a barrier exists, because Software Development Life Cycle (SDLC) drag is real. It is created by mindsets matured in a legacy waterfall shop, inefficient processes, insufficient execution strategies, and pre-existing technologies optimized for phases, division of labor, hand-offs and governance (organizational inertia).

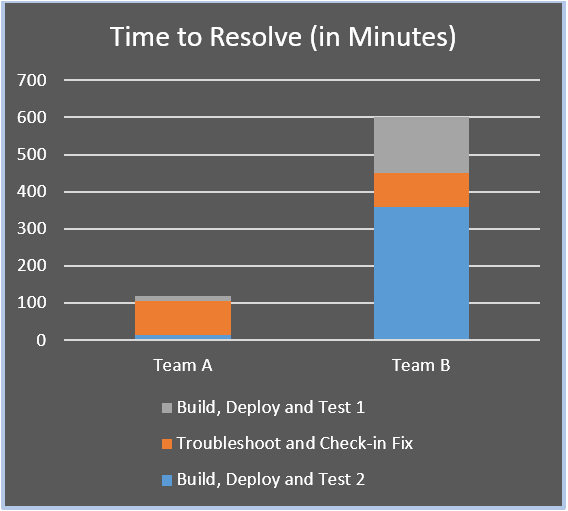

The single most important key to increasing delivery velocity beyond the barrier is addressing the cadence of the feedback loop, as measured by mean time to X (for example, mean time to discover or mean time to resolve). There are many feedback loops in software delivery. Below is a simple example of one, as experienced by two teams:

Team A initiates a build that triggers automatic deployment and test execution. Within minutes it becomes clear that, although the build is not broken, the application functionality is severely degraded. The time to discover for Team A = 15 minutes. Team A spends 90 minutes, exactly the same amount of time as Team B, troubleshooting and checking in a fix. The combined time for the second build, deploy and test cycle = 15 minutes. The majority of the tests pass and the build is approved for further evaluation. The time to resolve the degrading issue introduced in the original build was 120 minutes (2 hours).

Team B initiates a build and sets a tracker status to ready for deployment, assigning it to the deployment team lead. The deployment team lead is out this afternoon at the dentist, so the tracker is set to sit until morning. He checks his email after hours, however, and decides to deploy the build from home, then sets the tracker to ready for test and assigns it to the test manager. The test manager promptly runs the automated smoke test suite, and the tests fail across the board. She suspects the deployment team forgot to restart the web services application tier, so she logs into the monitoring interface and, sure enough, the web service is not running. She promptly updates the tracker and assigns the ticket back to deployment, following up immediately with a phone call to the deployment team lead to speed things along. The deployment team starts the services and assigns the ticket back to the test team as ready for test. It was important to reassign the tickets formally because the management for Team B tracks and monitors rework closely, and managers get upset if the team does not follow the defined workflow. The test manager fires off the automated test suite and finally discovers the degrading issue in the build. The time to discover for Team B = 360 minutes. Team B spends 90 minutes, exactly the same amount of time as Team A, troubleshooting and checking in a fix. The new build follows a similar path as before. This time the wait occurs because development marked the tracker ready for deployment right before lunch. The post-lunch deployment is clean with no rework, but the test manager had a lunch meeting and is only now getting to her test run. Thankfully, she does not have any afternoon meetings, so upon her return she fires off the automated tests, which pass, and she approves the build for further evaluation. The combined time for the second build, deploy and test cycle = 150 minutes. The time to resolve the degrading issue introduced in the original build was 600 minutes (10 hours).

In this example, Team B loses an entire business day (480 minutes) to the feedback loop compared to Team A for the same incident. Even when nothing goes wrong, Team B loses more than 2 hours per deployment compared to Team A (150 minutes versus 15 minutes for a clean build, deploy and test cycle). Given these numbers, Team A will build, deploy, and test more often. Team B, well, they are in a meeting right now with their project manager, brainstorming how to get the project back in the green.
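The arithmetic behind the two stories can be sketched in a few lines of Python. This is only an illustration: the `FeedbackLoop` class and its field names are hypothetical, and the durations are the ones given in the Team A and Team B examples above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FeedbackLoop:
    """Durations, in minutes, for one defect's trip through the loop.

    Hypothetical helper for illustration; not part of any real tool.
    """
    discover: int  # first build/deploy/test cycle, until the issue is seen
    fix: int       # troubleshooting and checking in a fix
    verify: int    # second build/deploy/test cycle

    @property
    def time_to_resolve(self) -> int:
        # Time to resolve is the sum of the three stages.
        return self.discover + self.fix + self.verify


# Figures taken from the two team narratives.
team_a = FeedbackLoop(discover=15, fix=90, verify=15)
team_b = FeedbackLoop(discover=360, fix=90, verify=150)

for name, loop in (("Team A", team_a), ("Team B", team_b)):
    print(f"{name}: time to resolve = {loop.time_to_resolve} min")

# Both teams spend the same 90 minutes on the fix itself,
# so the whole gap comes from the two build/deploy/test cycles.
gap = team_b.time_to_resolve - team_a.time_to_resolve
print(f"Gap per incident: {gap} min ({gap // 60} h)")
```

Because the fix time is identical for both teams, the sketch makes it visible that the entire difference sits in waiting and hand-offs around the work, not in the work itself.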

Boyd’s Law of Iteration, developed by USAF Col. John Boyd in a setting where lives were on the line, states that competitive advantage comes not from observing, orienting, planning and acting better, but from observing, orienting, planning and acting faster. In short, Boyd’s Law of Iteration says that speed of iteration beats quality of iteration. In closing, breaking the speed of agile barrier without setting the stage for a catastrophic outcome requires addressing the root causes that keep us from iterating rapidly: shrink the feedback loops and move them to the earliest possible point in the process.