When an application under test (AUT) produces less than satisfactory results, the first question a customer will inevitably ask is “What went wrong?”, closely followed by “Are you sure these results are correct? What about the scripts? Are they working properly?”

And so it begins.

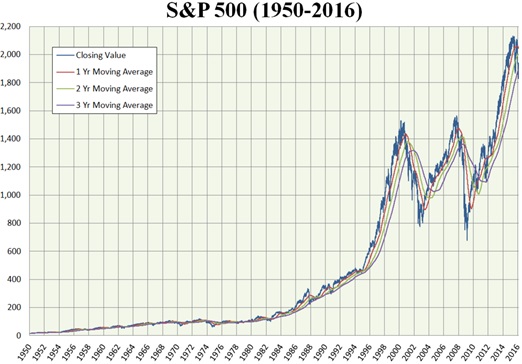

From a performance engineer’s perspective, this is a fairly common scenario and one that I’ve found myself in on numerous occasions. No one, especially developers, wants to believe their application might be the culprit and the reason why their average transaction response time graph resembles the S&P 500 index.

*If your application response times look like this, you might have a problem.*

Instead, the onus is now firmly on the performance team to prove that it’s the AUT that’s at fault and not the testing tool or the engineer who dropped the ball.

Of course, there are times when an engineer might make a mistake. A runtime setting gets overlooked, a correlation is missed or wrongly captured, or a parameter is incorrectly configured. These mistakes are usually obvious and exposed early on during the shakeout stage.

Green Doesn’t Always Mean Go!

But what if your script compiles without error and not only compiles, but replays successfully? Is this a guarantee that the application is working as expected? In short, no, and it’s a dangerous assumption to make. Just because the summary result shows a green checkmark doesn’t mean you’re in the clear.

*This is NOT a guarantee your application is working.*

Far more often than they should, engineers fail to include sufficient error handling and content checking in their scripts.

I recall an instance where a client had been conducting their own internal performance testing for some time. Every release and subsequent test passed spectacularly. Unfortunately, and unbeknownst to them, the application was actually generating multiple errors during the test.

The scripts in question would continue until completion, despite the previous errors. Of course, there were other red flags that should have raised questions as to the integrity of the test, but this is just an example.

In this case, a simple web_reg_find statement or the ContentCheck runtime setting within VuGen could have caught these errors and saved a lot of embarrassment for everyone concerned.

In the coding example below, we use the web_reg_find function to determine whether a web page loaded successfully or not. The code searches the HTTP response for the text “Congratulations” and saves the number of matches to the tValid parameter. It then uses if/else logic to determine what happens next. If the text isn’t found, the transaction fails, the iteration stops, and a new iteration starts. If the text is found, the transaction passes and the script continues.

    Action()
    {
        web_set_user("{pUsername}", "{pPassword}", "{pTarget}:{pPort}");

        /* Register the content check before the request it applies to.
           tValid receives the number of times the text is found. */
        web_reg_find("Text=Congratulations", "SaveCount=tValid", LAST);

        lr_start_transaction("PEAK_T010_GetPSID");

        web_custom_request("SubmitRequest_GetPSID",
            "URL=https://{pTarget}:{pPort}/api/employees/psid",
            "Method=GET",
            "Resource=1",
            "RecContentType=application/json",
            "Referer=http://localhost:3000/",
            "Snapshot=t66.inf",
            LAST);

        if (atoi(lr_eval_string("{tValid}")) == 0) {
            /* Text not found: fail the transaction and start the next iteration. */
            lr_end_transaction("PEAK_T010_GetPSID", LR_FAIL);
            lr_exit(LR_EXIT_ITERATION_AND_CONTINUE, LR_AUTO);
        }
        else {
            /* Text found: let LoadRunner set the transaction status. */
            lr_end_transaction("PEAK_T010_GetPSID", LR_AUTO);
        }

        return 0;
    }

By using this approach, we can verify that each page has loaded as expected and continue accordingly. This is just one of a number of ways a performance engineer can make a script more robust and less prone to reporting erroneous results.
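To see why a successful HTTP status on its own proves little, the decision the script makes can be sketched outside VuGen in plain C. This is a minimal illustration, not LoadRunner code: count_matches and step_passed are hypothetical helper names, and the counting only loosely mirrors what SaveCount records.

```c
#include <stddef.h>
#include <string.h>

/* Count non-overlapping occurrences of `text` in `body`.
   Hypothetical plain-C helper; it is not part of the LoadRunner API. */
int count_matches(const char *body, const char *text)
{
    size_t len = strlen(text);
    int count = 0;
    const char *p = body;

    if (len == 0) {
        return 0;                /* treat an empty search string as no match */
    }
    while ((p = strstr(p, text)) != NULL) {
        count++;
        p += len;                /* resume searching after this match */
    }
    return count;
}

/* A step passes only if the status is 2xx AND the expected text is present.
   Returns 1 for pass, 0 for fail. */
int step_passed(int http_status, const char *body, const char *expected)
{
    if (http_status < 200 || http_status > 299) {
        return 0;
    }
    return count_matches(body, expected) > 0;
}
```

With this sketch, step_passed(200, "<html>Session expired</html>", "Congratulations") returns 0 even though the status code is 200, which is exactly the situation a status-only script would report as green.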

DO

- Include error handling in your script.

- Include content checks (using web_reg_find or via runtime settings).

- Make use of the lr_exit function to control how your script should exit in the event of an error.

- Make use of the lr_continue_on_error function to determine how HPE LoadRunner behaves in the event of an error.
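The difference between stopping an iteration at the first error and letting it run to completion can be modelled in a few lines of plain C. This is a simplified simulation under stated assumptions, not LoadRunner's implementation: run_summary, run_iterations, and the stop_on_error flag are hypothetical names invented for the illustration.

```c
#include <stddef.h>

/* Hypothetical summary of one simulated test run. */
typedef struct {
    int steps_executed;     /* total steps that were actually run */
    int iterations_failed;  /* iterations containing at least one failed step */
} run_summary;

/* step_ok is a flat array of iterations * steps flags:
   step_ok[i * steps + j] is 1 if step j of iteration i passes, 0 if it fails.
   When stop_on_error is non-zero, a failed step ends the current iteration
   and the run moves on to the next one. */
run_summary run_iterations(const int *step_ok, int iterations, int steps,
                           int stop_on_error)
{
    run_summary s = {0, 0};
    int i, j;

    for (i = 0; i < iterations; i++) {
        int failed = 0;
        for (j = 0; j < steps; j++) {
            s.steps_executed++;
            if (!step_ok[i * steps + j]) {
                failed = 1;
                if (stop_on_error) {
                    break;  /* skip the remaining steps of this iteration */
                }
            }
        }
        s.iterations_failed += failed;
    }
    return s;
}
```

For two iterations of three steps where only the second step of the first iteration fails, the stop-on-error run executes five steps and the run-to-completion variant executes all six. That extra step runs against a session that has already failed, so whatever response time it records is of doubtful value.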

Which brings me to this final thought. Gaining a client’s confidence can take time. It’s quickly and easily lost when an engineer cuts corners or fails to follow best practices. Make sure you don’t make the same mistake.