In the book Continuous Delivery, authors Jez Humble and David Farley described a mindset, a process, and ultimately a solution that has transformed delivery cycle times and quality levels across the IT world. In my opinion, continuous delivery (CD) formed the technical practices that buoy up the DevOps movement. The improvements in tools, automation, and process that CD vendors and practitioners have championed have also raised the tide, and with it other boats in the software engineering space.

At the same time, we have seen significant improvements in the way virtualization and cloud solutions transform performance and scalability. This has come at the same pace as innovations in CPU, flash storage, and mobile network technology. Plus, there have been even further wins in application performance monitoring that take advantage of managed code environments, SaaS, improved statistical analysis, and more. All of these together prompted me to generate a performance maturity model akin to the CD maturity model created in the aforementioned book.

Performance of software solutions is a complex space, and like any author, I am proposing a model and requesting feedback. I submit the following categories for consideration:

- Solution Design

- Environments and Simulation

- Goals and Requirements

- Team Collaboration and Process

- Monitoring and Analysis

Solution Design

An architecture and its underlying algorithms, which together make up the solution design, have the most dramatic effect on the performance and scalability of a software solution. If you think about it, some software tools running today still perform and scale on CPU and server architectures that are more than 30 years old. These solutions still meet their requirements because of the quality of their initial design. In contrast, we have also witnessed newly designed solutions whose developers failed to consider the simple laws of quantitative analysis and the speed of light. These designs were completed without anyone doing the math, counting the round trips, or even calculating memory or storage costs.
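As an illustration of what "doing the math" can look like, here is a minimal back-of-the-envelope sketch in Python. The function names, the fiber speed constant (roughly 200,000 km per second, a common approximation), and the example inputs are all assumptions made for illustration, not figures from any real design.

```python
# Back-of-the-envelope latency and memory estimate (all inputs are illustrative).

# Assumption: light in optical fiber covers roughly 200,000 km per second.
FIBER_KM_PER_SECOND = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Physical lower bound for one round trip, ignoring routing and queuing."""
    return 2 * distance_km / FIBER_KM_PER_SECOND * 1000


def request_floor_ms(distance_km: float, round_trips: int, server_ms: float) -> float:
    """Best-case time for a request that needs several sequential round trips."""
    return round_trips * (min_round_trip_ms(distance_km) + server_ms)


def memory_gb(records: int, bytes_per_record: int) -> float:
    """Raw memory needed to hold every record, before any overhead."""
    return records * bytes_per_record / 1e9


if __name__ == "__main__":
    # Hypothetical design: 12 sequential calls to a data center 4,000 km away,
    # each costing 5 ms of server time, over 50 million 200-byte records.
    print(round(request_floor_ms(4000, round_trips=12, server_ms=5), 1), "ms")
    print(round(memory_gb(50_000_000, 200), 1), "GB")
```

With these made-up inputs, the design cannot respond in much less than half a second no matter how well the code is tuned, while collapsing the twelve calls into two would bring the floor down to roughly 90 ms. That kind of arithmetic is cheap to do before any code is written.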

Thus, I present the first table.

| Level |
| --- |
| Level 4: Optimizing |
| Level 3: Managed |
| Level 2: Defined |
| Level 1: Repeatable |
| Level 0: Initial |

Guiding Principles

TOGAF introduced me to the concept of guiding principles. In essence, these are a prioritized set of attributes that helps guide a development organization. For instance, the architectural guiding principles for an e-commerce solution that sells airplane parts might be prioritized in the following way:

- Security

- Robustness

- Data accuracy

These principles would help the development team choose operating systems and database technology. The team could also more easily weigh a decision such as incorporating a new UI technology versus investing in a monthly penetration test.

In contrast, a new solution that tracks the number of likes and dislikes for your photo montage would have the following guiding principles:

- Performance/scalability

- Quickness of new design changes

- Minimized hardware costs

The team behind this solution should stay on top of prototypes and designs that can drive down costs and improve scalability. The robustness of the data is secondary to the short-term value proposition of social media.

Prototyping

Computer systems are the lowest cost and most easily prototyped solutions you can build. For this reason, their performance and other characteristics have improved at a rate unsurpassed in any other human endeavor. Simply put, we can test quickly, cheaply, and accurately. So, in order to improve the performance of a solution beyond simple tuning parameters, the design and development team must build prototypes that model performance against the targeted goals. If you talk with the teams behind the most important software solutions of our era, they can tell you about the countless whiteboard designs that failed during prototyping.

Imagine strapping your business into a rocket ship that was designed entirely on paper, without an intermediate prototype or validation. Or, similarly, imagine being handed a tractor trailer to race at Le Mans, with three sets of tires to choose from as your only means of maximizing performance.

Measurement and Monitoring

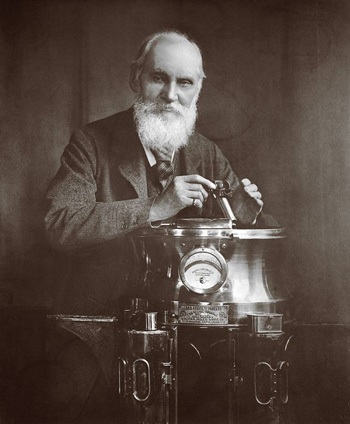

This quote from Lord Kelvin never gets old.

“I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely, in your thoughts, advanced to the stage of science, whatever the matter may be.”

In the context of a solution design for performance and scalability, the team should inject measurement points into the solution or work with a measurement framework that provides transparency into the validation. For instance, during the design of the Windows server systems solutions, we added performance counters that tracked the queue depth, latency, and throughput for the key interactions within the systems. This provided clarity to the operators of the solutions down the road and enabled them to modify and optimize their implementations.
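A measurement point of this kind can be quite small. The following Python sketch shows one way to track queue depth, latency, and throughput for a single interaction. The class name `InteractionCounters` and its methods are hypothetical and are not the Windows performance counter API; a real system would publish these values to whatever counter or metrics framework it already uses.

```python
import time
from dataclasses import dataclass, field


@dataclass
class InteractionCounters:
    """Hypothetical in-process counters for one key interaction."""
    name: str
    queue_depth: int = 0
    completed: int = 0
    total_latency_s: float = 0.0
    started_at: float = field(default_factory=time.monotonic)

    def enqueue(self) -> None:
        """Record that a request entered the queue."""
        self.queue_depth += 1

    def complete(self, latency_s: float) -> None:
        """Record that a request left the queue after latency_s seconds."""
        self.queue_depth -= 1
        self.completed += 1
        self.total_latency_s += latency_s

    def snapshot(self, now=None) -> dict:
        """Return current queue depth, average latency, and throughput."""
        now = time.monotonic() if now is None else now
        elapsed = max(now - self.started_at, 1e-9)  # avoid division by zero
        average = self.total_latency_s / self.completed if self.completed else 0.0
        return {
            "name": self.name,
            "queue_depth": self.queue_depth,
            "avg_latency_s": average,
            "throughput_per_s": self.completed / elapsed,
        }


if __name__ == "__main__":
    counters = InteractionCounters("checkout")
    counters.enqueue()
    counters.complete(latency_s=0.2)
    print(counters.snapshot())
```

The `now` parameter on `snapshot` exists only so the calculation can be checked with fixed timestamps; operators would normally read the snapshot on a timer.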

In an open source/Java solution, the equivalent decision would be to create log4j2 output that is easily digestible by Splunk, or to choose a threading framework that does not overly complicate the resource analysis needed to trace workload impact within a component. In other words, the design team should validate each component with the target production monitoring solution before it is too late to change the design.
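To make the idea of digestible log output concrete, here is a small sketch. It uses Python's standard `logging` module rather than log4j2, and it assumes that the monitoring tool can extract `key=value` pairs from a log line; check that assumption against your own indexer. The helper `format_kv` and the field names are hypothetical.

```python
import logging


def format_kv(**fields) -> str:
    """Render fields as space-separated key=value pairs, quoting where needed."""
    parts = []
    for key, value in fields.items():
        if isinstance(value, float):
            value = f"{value:.3f}"  # fixed precision keeps numeric fields uniform
        text = str(value)
        if " " in text or text == "":
            text = '"' + text.replace('"', '\\"') + '"'
        parts.append(f"{key}={text}")
    return " ".join(parts)


if __name__ == "__main__":
    logging.basicConfig(
        level=logging.INFO,
        format="%(asctime)s level=%(levelname)s %(message)s",
    )
    log = logging.getLogger("order-service")
    # One line per interaction, carrying the same measurements an operator needs.
    log.info(format_kv(op="place order", latency_ms=12.5, queue_depth=3))
```

The point of the design choice is that the log format is agreed with the monitoring side up front, so the fields can be charted without custom parsing later.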

Automated Build Acceptance

One of the most concrete elements of CD practices is automation of the build, deployment, and subsequent validation. This cycle of automation captures changes to the expected behavior of the solution so quickly that you could call it automatic defect prevention. For performance, the key concept is to build this validation into the engineering practices that govern acceptance of each build or change into the continuum of changes that creates the final software solution.

Many automation tools, both free and backed by enterprise-level support, can provide repeatable and trustworthy results. Adding this check minimizes the risk that a change introduces an unknown or unplanned performance regression. The cost of the check has become very low. So if performance is a key guiding principle, adding it to the development process is a very low-cost boon compared with the costlier alternative: debugging or troubleshooting a design through a longer, slower test that must account for a broad scope of changes.
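A build acceptance check for performance can be as simple as timing a key operation and comparing it with a stored baseline. The sketch below is one possible shape for such a gate, not a description of any particular tool. The function names, the 95th percentile choice, the 10% tolerance, and the baseline value are all assumptions for illustration.

```python
import math
import time


def measure_ms(operation, runs: int = 20) -> list:
    """Time the operation several times and return the samples in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        samples.append((time.perf_counter() - start) * 1000)
    return samples


def percentile(samples, pct: int):
    """Nearest-rank percentile: smallest sample with at least pct% at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank - 1, 0)]


def accept_build(samples_ms, baseline_p95_ms: float, tolerance: float = 0.10):
    """Accept the build if p95 latency stays within tolerance of the baseline."""
    p95 = percentile(samples_ms, 95)
    return p95 <= baseline_p95_ms * (1 + tolerance), p95


if __name__ == "__main__":
    # Hypothetical operation under test and a hypothetical stored baseline.
    samples = measure_ms(lambda: sum(range(10_000)))
    ok, p95 = accept_build(samples, baseline_p95_ms=5.0)
    print("accepted" if ok else "rejected", f"p95={p95:.3f} ms")
    raise SystemExit(0 if ok else 1)  # non-zero exit fails the pipeline step
```

Because the script exits with a non-zero status on a regression, it can run as an ordinary step in most build pipelines right after the functional acceptance tests.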

Designing Performance in an Agile World

I have had several fairly lengthy discussions with architects and design teams that sat on either side of an agile process. If you treat the value proposition and requirements for prototyping, measurement, and automation within the solution as non-functional requirements or critical user stories, then the steps mentioned above fit easily into either delivery process.

I think the concept of planning for performance can be broken down logically, just like any other feature. However, I will say that if you wait until the end to add performance as a user story or feature, you will learn a painful lesson. I remember having to add security to Microsoft Commerce Server after the fact. That really hurt our plans.