I like models and I love automation on top of them. Software development in almost any form requires a logical model and an implementation in code. The best developers can find the re-usable patterns or code within a complex system to minimize their work. Functional testing gurus also use this pattern for success.

Anti-Patterns for Coverage

Functional testing is a complicated job. Since software and the connecting solutions can really do anything the mind can imagine, a functional tester must simplify the problem space. Unfortunately, I have observed the following two anti-patterns in defining test coverage.

Requirement Pairing

One flawed approach to functional test coverage is to write exactly one test case for every use case or requirement. The first mistake here is that it places the onus upon some other team to create the ‘whole and perfect’ set of requirements. Even if the use cases are very well constructed, the functional coverage is limited to the top-level design. This type of testing will miss the permutations that sit beneath each requirement.

Output Focused Coverage without Input Analysis

Another approach is to leverage a code coverage analysis tool. This works through various mechanisms built into the solution under test and yields a ‘code coverage’ figure for the functional test suite. This clearly measurable technique has significant merit, but one clear problem: since the process only measures the results, the test input pattern could be spaghetti, and the resulting data would not help improve the coverage efficiently. It needs a complementary practice on the front end. Another risk in this pattern is the increasing depth and complexity of modern enterprise applications. In J2EE, a single high-level business function often resolves to a Java stack that is 50 or more calls deep. These underlying calls make any code coverage analysis less accurate because of the assumption of underlying quality in the stack.

Towards Model Based Coverage

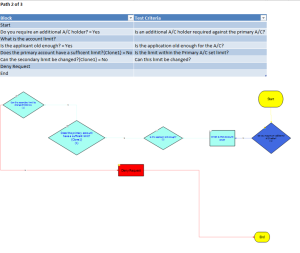

I really like the new test modeling solution from our partner Grid-Tools, which leverages one of the simplest modelling techniques in computer science. Using the flowchart to describe a system under test is a touch of genius. By laying out a UI or application in Agile Designer, a test engineer quantifies the permutations of nodes and paths. The tool then generates an optimized set of cases, reducing the actual test cases from the full set of permutations to one that covers all the nodes and/or paths. Furthermore, the model helps drive a state-machine-driven evaluation of the system under test, which pushes the focus away from ad hoc test case development. Models can become complex, but they are inherently simpler than an alphabetical or chronological list of test cases. Challenging the test team to develop and maintain a clarifying model of the system under test is also a great checkpoint for the overall project complexity. Too often test plans are boilerplate documents that are created and never re-used. By making the actual test plans into a living model that generates the test cases, the scrum masters and testers can ensure that the regression processes are complete.
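To make the idea of reducing the full set of permutations concrete, here is a minimal sketch in Python. It is not how Agile Designer works internally (I am not describing its algorithm here); it simply assumes a hypothetical checkout flowchart, enumerates every path through it, and then greedily picks paths until every edge has been exercised at least once. The flow, the node names and the function names are all made up for illustration.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]

# Hypothetical flowchart of a checkout: each node maps to its possible next nodes.
FLOW: Dict[str, List[str]] = {
    "start": ["guest", "member"],
    "guest": ["cart"],
    "member": ["cart"],
    "cart": ["card", "paypal", "invoice"],
    "card": ["confirm"],
    "paypal": ["confirm"],
    "invoice": ["confirm"],
    "confirm": [],
}


def all_paths(flow: Dict[str, List[str]], node: str, end: str) -> List[List[str]]:
    """Enumerate every path from node to end (assumes the flow has no cycles)."""
    if node == end:
        return [[node]]
    paths = []
    for nxt in flow[node]:
        for tail in all_paths(flow, nxt, end):
            paths.append([node] + tail)
    return paths


def edges(path: List[str]) -> Set[Edge]:
    """The set of transitions a single path exercises."""
    return set(zip(path, path[1:]))


def greedy_edge_cover(paths: List[List[str]]) -> List[List[str]]:
    """Pick paths one at a time, always taking the one that covers the most
    not-yet-covered edges. Greedy, so small but not guaranteed to be minimal."""
    uncovered: Set[Edge] = set().union(*(edges(p) for p in paths))
    chosen = []
    while uncovered:
        best = max(paths, key=lambda p: len(edges(p) & uncovered))
        chosen.append(best)
        uncovered -= edges(best)
    return chosen


paths = all_paths(FLOW, "start", "confirm")
cover = greedy_edge_cover(paths)
print(len(paths), "possible paths,", len(cover), "needed to cover every edge")
```

For this toy flow the six possible paths shrink to three test cases that still touch every node and every transition. The gap widens quickly as more decision points are chained together, which is exactly why working from a model beats a flat list of cases.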

Designing Test Automation from a Model

Another way to win with this modeling technique is to logically prioritize test automation efforts. After the test cases are quantified, you can more easily develop an automation strategy based on planned labor savings. Furthermore, a clean model will simplify the development required to do the test automation. Any test automation without a clear model of the application is illogical. One might suggest a next step where the tool lays out the actual code, for example for BDD tools such as Cucumber or JBehave. I like these concepts too, but I don’t think they save as much time as one might think. Let’s just say that debugging generated code has never been my favorite thing to do.
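As a rough illustration of prioritizing by planned labor savings, the sketch below ranks test cases by the hours saved per year once the one-time scripting cost is subtracted. The case names and every number are invented, and the formula is just one simple way to frame the trade-off, not a standard metric.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Case:
    name: str
    manual_minutes: float    # time to execute once by hand (assumed estimate)
    runs_per_year: int       # how often regression needs it (assumed estimate)
    automation_hours: float  # one-time cost to script it (assumed estimate)

    def net_hours_saved(self) -> float:
        """Manual effort avoided in a year minus the cost to automate."""
        return self.manual_minutes * self.runs_per_year / 60 - self.automation_hours


# Hypothetical cases taken from a quantified model.
cases: List[Case] = [
    Case("member_paypal", manual_minutes=10, runs_per_year=50, automation_hours=10),
    Case("guest_card", manual_minutes=15, runs_per_year=50, automation_hours=6),
    Case("member_invoice", manual_minutes=30, runs_per_year=12, automation_hours=4),
]

# Automate the biggest savers first; negative values are candidates to leave manual.
ranked = sorted(cases, key=Case.net_hours_saved, reverse=True)
for case in ranked:
    print(f"{case.name}: {case.net_hours_saved():.1f} h/year")
```

With these made-up numbers, guest_card comes out on top and member_paypal actually costs more to automate than it saves in the first year, which is the kind of decision that is hard to see without the cases quantified first.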

Adding Data Permutations

When applications are data driven, the permutations are not easily enumerated with a flow chart. However, this is where the real value of the Agile Designer (AD) tool lies. Since it is part of the Test Data Management suite of tools, it accepts data values in the nodes. This is very empowering in several ways. First, the data pools can be updated without having to change the flow chart models. This allows a group that is focused upon the data to collaborate more effectively with the functional test teams who own the flow charts. The test data team can create scripts directly within the database to create the data. They can create the best possible data by analyzing the data in production. They can also look at the actual data types within the database to find boundary, edge and error conditions that provide the best test coverage of the RDBMS constraints and connecting logic. When you reach this point you are really changing the game. This connects functional coverage with underlying data coverage to achieve a very high rate of coverage during integration validation.
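Here is a small sketch of that separation between the flow model and the data pool. It assumes a hypothetical path with two data-entry nodes, derives boundary and error values from made-up column constraints (an integer range and a maximum string length), and expands the path into concrete cases. None of the names come from the Grid-Tools products; they are placeholders.

```python
from itertools import product
from typing import Dict, List

# One path from the flow model (hypothetical node names). The test team owns this.
PATH = ["start", "enter_quantity", "enter_coupon", "confirm"]


def int_boundaries(lo: int, hi: int) -> List[int]:
    """Values at and just outside an inclusive integer range."""
    return [lo - 1, lo, hi, hi + 1]


def varchar_boundaries(max_len: int) -> List[str]:
    """Empty, longest allowed, and one character too long."""
    return ["", "x" * max_len, "x" * (max_len + 1)]


# The data team owns this pool and can change it without touching PATH.
# The constraints (1..99, 10 characters) are assumed for illustration.
DATA_POOL: Dict[str, list] = {
    "enter_quantity": int_boundaries(1, 99),
    "enter_coupon": varchar_boundaries(10),
}


def expand(path: List[str], pool: Dict[str, list]) -> List[dict]:
    """Combine every pooled value for each data node found on the path."""
    data_nodes = [node for node in path if node in pool]
    return [
        dict(zip(data_nodes, combo))
        for combo in product(*(pool[node] for node in data_nodes))
    ]


test_cases = expand(PATH, DATA_POOL)
print(len(test_cases), "data-driven cases for one path")
```

A full cross product like this grows fast, so in practice you would trim it the same way the paths are trimmed. The point of the sketch is only that the pool can be regenerated from the database constraints while the flow chart stays untouched.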

Adding Data Generation Scripts to Continuous Delivery

Where do you go from there? If you have read my other blog posts then you knew I was going to connect this to CD. Suppose you have created a model of your application that hits all the nodes and paths and also accounts for the critical data permutations, and you have automated the test cases in your favorite test tools where practical given your sprint cycles and resources. The Grid-Tools (GT) data generation tools are actually programs that you can version control along with the flow chart model, test scripts, test cases and everything else. Automate them to align with the latest database updates that are part of the build, and you create the perfect input and validation data for your extremely well covered system under test.

Other Models

Consider how this correlates to the performance test models we posted earlier on UCML and Creating Requirements. Also cross-reference this to threat model analysis for security.

By David Guimbellot, Area VP: Continuous Delivery & Test Data Management at Orasi Software